Visualizing a Reddit Hug Of Death With R: How To Reddit-Proof Your Website For Pocket Change

Last month, a post I wrote about an experiment showing how atrocious Soma water filters are blew up on Reddit. And with it blew up my tiny $2-a-month shared hosting plan. Thankfully, some kind redditors posted a cached version that kept it accessible, but an equal number of unkind redditors chastised me for not being able to handle front page traffic from the 6th most popular site in the world. It did get me thinking, however: how much traffic can a basic self-hosted WordPress site handle? In this case, obviously not enough. But could I find a cheap (~$5/month) hosting solution that could handle a Reddit hug-of-death?

The answer: yes.

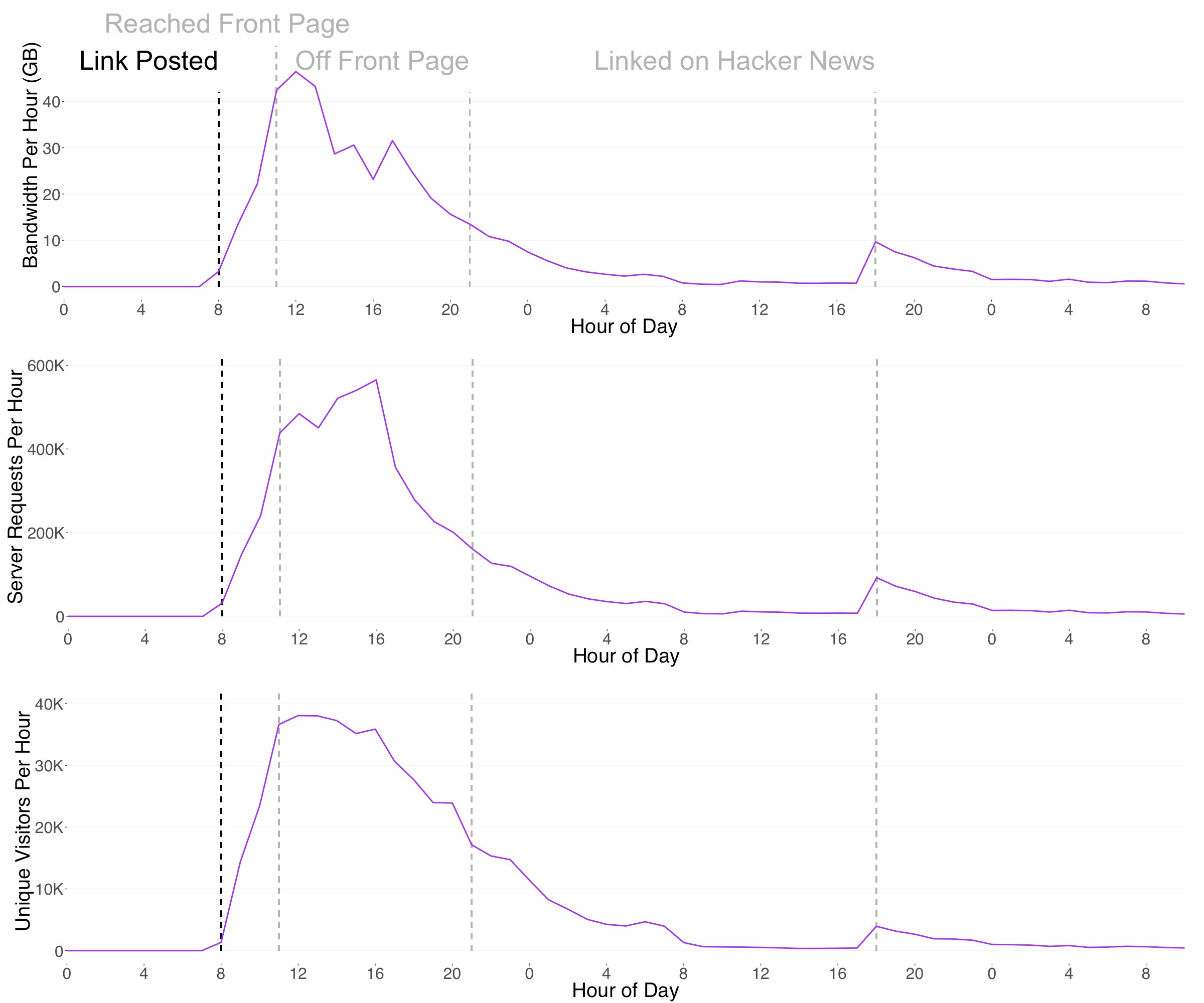

First things first: how much traffic does Reddit actually send you when you’re on the front page? My server crashed, so I wasn’t able to get detailed minute-by-minute information, but Cloudflare was able to give me the hourly totals for server requests, unique visitors, and bandwidth.

The peak traffic came at 4:00 PM, during which hour there were 565,310 server requests from 35,870 unique visitors. This works out to an average rate of 157 requests per second and 10 users per second. A request is a browser asking for a piece of data on the page (scripts, images, HTML), so one user makes many requests when they arrive. To give you an idea of the scale, in 2008 Wikipedia handled 50,000 requests per second with 300 servers. The average rate, however, hides the fact that requests arrive randomly, so the per-second count fluctuates around it. Although we don’t have the second-by-second information, we can estimate what it looks like by modeling the user arrivals as a random Poisson process.
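As a rough sketch of that arithmetic and of the Poisson assumption, the R snippet below derives the per-second averages from the hourly totals and simulates one hour of per-second user arrivals. The variable names and the random seed are illustrative choices, not part of the original analysis.

```r
# Hourly totals for the peak hour (from the text above)
requests_per_hour <- 565310
users_per_hour    <- 35870

# Average per-second rates over the 3600 seconds in the hour
requests_per_sec <- requests_per_hour / 3600   # about 157
users_per_sec    <- users_per_hour / 3600      # about 10

# Simulate one hour of per-second user arrivals as a Poisson process
# (the seed is arbitrary; it only makes the sketch reproducible)
set.seed(1)
arrivals <- rpois(3600, lambda = users_per_sec)

# Spread of users per second around the average
summary(arrivals)
```

Each element of `arrivals` is the simulated number of users landing in one second; the summary shows how far individual seconds stray from the average of 10.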

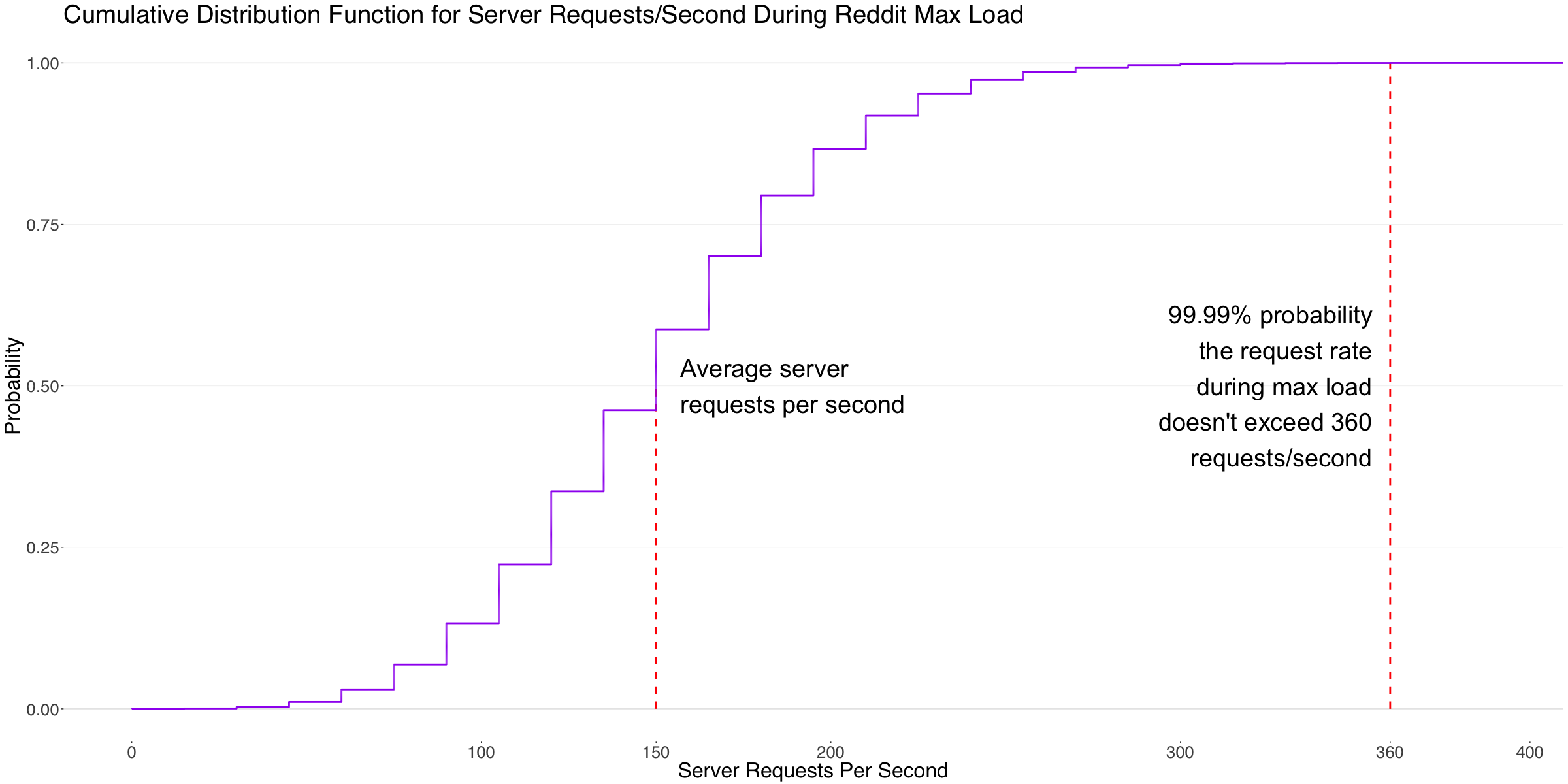


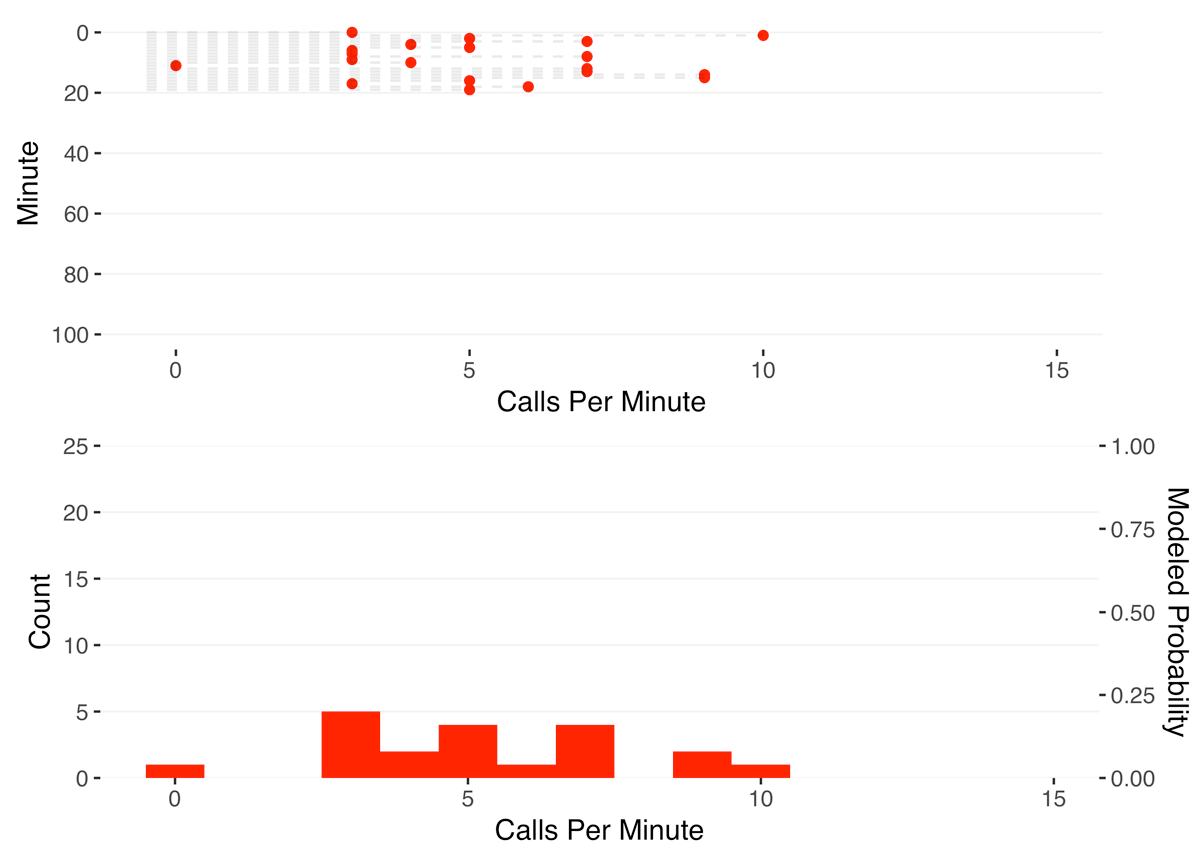

A primer on Poisson processes: One of the canonical examples of a Poisson process is the distribution of calls to a call center, where the average rate is known but the calls come in at random times. Each call arrives independently of every other call, so there might be stretches where no calls arrive and periods where many arrive. The call center would want to model this to determine the minimum staffing required to cover these high-call periods, balancing the risk of excessive hold times caused by understaffing against the financial cost of adding more customer service agents. By setting their allowable risk (here, we’ll say full coverage 99% of the time), they can look at the cumulative distribution function (CDF) of the Poisson distribution for their average call rate. The CDF gives the probability of receiving a certain number of events or fewer per time period. Thus, here it will show the maximum number of callers they can expect to receive 99% of the time.
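To make the call center example concrete, here is a minimal sketch in R. The average of 20 calls per minute is a made-up value for illustration; `qpois` and `ppois` are the Poisson quantile and CDF functions from base R.

```r
# Hypothetical call center: an average of 20 calls per minute (illustrative value)
avg_calls_per_min <- 20

# Smallest call count k such that P(calls <= k) is at least 99%
calls_covered_99 <- qpois(0.99, lambda = avg_calls_per_min)

# Check against the CDF: probability of seeing at most that many calls
coverage <- ppois(calls_covered_99, lambda = avg_calls_per_min)

calls_covered_99
coverage
```

Staffing for `calls_covered_99` calls per minute would cover the demand in at least 99% of minutes under this model.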

An astute observer would note that, unlike calls to a call center, server requests ARE in fact correlated: a single browser will quickly make many requests to load all of the page assets. Thus, the requests will arrive in bunches from each individual user. The number of requests per user for the page was about 15, obtained by dividing the total number of requests by the number of users. Our random event here is thus the user arrival, with the magnitude multiplied by 15 to get the number of server requests. Plotting the cumulative distribution function for a Poisson process with an average rate of 10 users (and thus 150 requests) per second gives the answer.

There is only a 0.01% chance of getting more than 24 users per second (360 requests) during peak traffic on a Reddit hug-of-death. Any hosting solution that will potentially be exposed to the harsh digital environment of Reddit’s front page should thus, at a minimum, support this rate.
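The threshold above can be reproduced with a few lines of R. This is a sketch under the same assumptions as the text (10 users per second on average, 15 requests per user); the variable names are illustrative.

```r
users_per_sec     <- 10   # average user arrival rate at peak (from above)
requests_per_user <- 15   # rounded ratio used in the text

# Users per second that will not be exceeded 99.99% of the time
peak_users <- qpois(0.9999, lambda = users_per_sec)

# Corresponding burst of server requests
peak_requests <- peak_users * requests_per_user

# Probability of exceeding that many users in any given second
p_exceed <- ppois(peak_users, lambda = users_per_sec, lower.tail = FALSE)

c(peak_users = peak_users, peak_requests = peak_requests)
```

Under these assumptions the quantile is 24 users, or 360 requests, and `p_exceed` comes out below 0.01%.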

nginx + PHP 7.1 + PHP-FPM = 40+ million visitors per day with zero failures

Now that we know the performance goal, we need to determine how badly the shared hosting performed, and whether there is any other hosting solution at a similar price that can handle this load. In terms of cheap hosting solutions, one that repeatedly came up was DigitalOcean. For $5 a month, you get a virtual private server with 1 TB of bandwidth per month, 1 CPU core, and 512 MB of memory. In particular, this series of posts by the blog DeliciousBrains (who do WordPress plug-in development) claimed amazing performance with the cheapest DigitalOcean server by installing nginx, PHP 7.1, and PHP-FPM (don’t worry about the acronyms) to augment the standard WordPress install with ultra-fast caching. They showed that a WordPress site run with this $5-a-month set-up could support 40 million visitors per day with zero failures. Spread evenly over a day, 40 million is about 463 per second; counting each of those as a single request, that works out to about 31 users (470 requests) per second at the 15-requests-per-user ratio above. They didn’t push the test to the point where the server started failing, however. We need to run our own test.

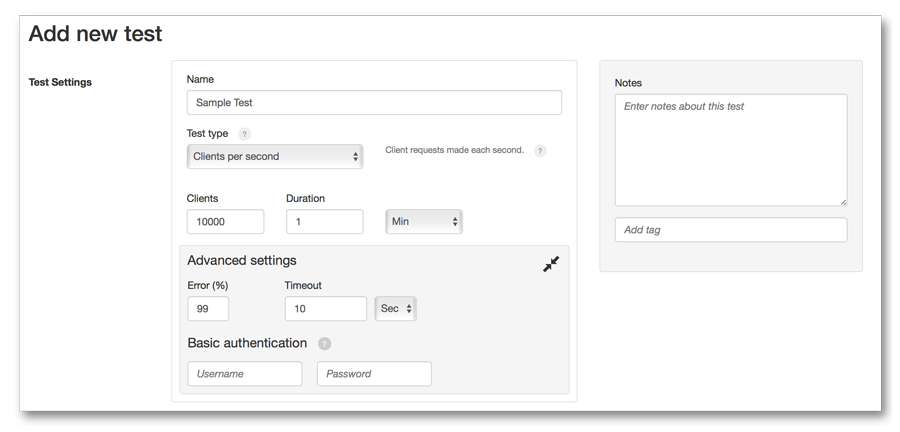

After following DeliciousBrains’ installation instructions and migrating my site over to the new hosting, I compared performance by load testing the different hosting options and seeing when and how badly they failed. There are several load testing services out there, but loader.io is the only one I found that allows up to 10,000 requests/second on its free tier.

The service sends a set number of requests/second to your website, and records how many were returned successfully and how many produced errors. We can use this data to fit a binomial model that determines the probability of failure as a function of the number of server requests per second.
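To illustrate the kind of fit described here, the sketch below runs a binomial GLM on made-up load test numbers (they are not the measurements from this post, and the column names are hypothetical). It uses the two-column `cbind(failures, successes)` response, a standard way to specify a binomial model in R that is equivalent to a failure proportion weighted by the number of trials.

```r
# Made-up load test results (illustrative only, not the data from this post)
loadtest <- data.frame(
  rate     = c(100, 250, 500, 750, 1000, 1250, 1500, 2000),  # requests/second
  sent     = rep(1000, 8),                                    # requests sent per test
  failures = c(0, 2, 10, 80, 350, 700, 920, 995)              # requests that errored
)

# Binomial GLM: failure probability as a function of request rate
fit <- glm(cbind(failures, sent - failures) ~ rate,
           family = binomial, data = loadtest)

# Predicted failure probability at the 360 requests/second target
p_fail_360 <- predict(fit, newdata = data.frame(rate = 360), type = "response")

# Request rate at which the fitted failure probability reaches 50%
rate_50 <- -coef(fit)[["(Intercept)"]] / coef(fit)[["rate"]]
```

The 50% point, `rate_50`, is a convenient single number for comparing hosting set-ups: the further right it sits, the more load the server tolerates before failures dominate.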

glm(Failures ~ Users,family="binomial",data=shared,weights=Users) -> fitshared

glm(Failures ~ Users,family="binomial",data=VPSoneCPU,weights=Users) -> fitVPSoneCPU

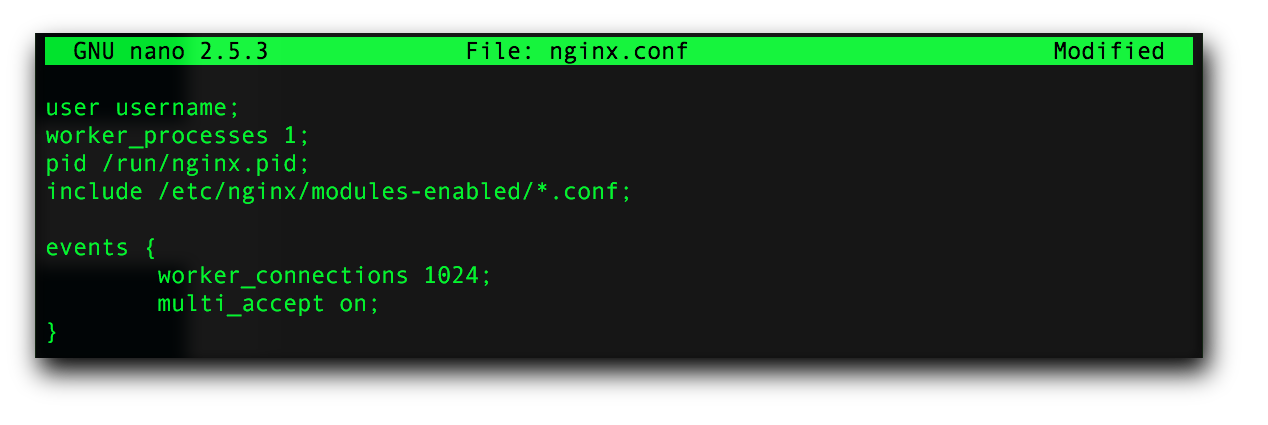

glm(Failures ~ Users,family="binomial",data=VPStwoCPU,weights=Users) -> fitVPStwoCPUI tested across three different conditions: shared hosting, the lowest tier 1 CPU DigitalOcean droplet ($5/month), and the 2 CPU DigitalOcean droplet ($20/month). Adding another CPU enables you to serve more requests/second, so the second tier DigitalOcean droplet ($10/month) that only adds more memory won’t help serve more visitors. The only changes to the server to scale up the VPS was to go into nginx.conf file (which is easy to do if you follow DeliciousBrains’ set-up instructions) and set the number of available CPUs from 1 to 2. Should the need arise, you can vertically scale your website to handle double the load by changing this one number.

Here are the results (on a log scale, because the performance difference is so large), with the binomial model fit to the data:

The cPanel shared hosting solution begins to fail as low as 8 requests/second, while the lowest tier DigitalOcean droplet doesn’t start failing until more than 1000 requests/second. This matches our nginx server set-up (see Figure 5), where we allocated 1024 connections per CPU. Comparing the probability curves, the base VPS performs 153x better, at 2.5x the cost. Pretty impressive for $5 per month. Set up correctly, even the lowest tier VPS can handle Reddit’s front page.


Doubling the number of CPUs on the VPS shifts the distribution over by another 1000 requests/second, which matches what we might have guessed by doubling the number of supported connections to 2048. If your blog traffic is like mine and comes mainly when you release new posts and then falls off to a low but steady trickle, it might make sense to scale your website to this higher tier for the day you release your post. Since DigitalOcean only charges by the hour, you can do this coinciding with expected traffic bumps and only pay for the day or two at the higher rate (which comes out to less than a dollar).

Overall, this shows that if you run a relatively static WordPress site, you can cheaply support a huge number of visitors for the cost of a single piece of avocado toast. Performance should be even better if you use a content delivery network (CDN) to serve your media. Of the 5.9 million requests my site received during its trip through Reddit, only 20% were actually served by my server; the vast majority were files served by the free CDN, Cloudflare. In effect, this gives me 5x the possible request rate (here, 5,000 requests/second, which translates to about 13 billion requests/month, or slightly under a billion users), with the added benefit of saving most of my server bandwidth costs. For $5 a month. And as an added bonus, Cloudflare will automatically provide a cached version should the server fail.
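The back-of-the-envelope numbers in that paragraph can be checked with a short R sketch. The 30-day month and the variable names are assumptions made for the estimate; the other values come from the text.

```r
origin_capacity   <- 1000   # requests/second the $5 droplet served before failing
origin_share      <- 0.20   # fraction of requests that actually reached my server
requests_per_user <- 15     # rounded ratio used earlier

# If only 20% of requests hit the origin, total traffic can be 1 / 0.20 = 5x larger
total_capacity <- origin_capacity / origin_share          # 5000 requests/second

# Sustained over a 30-day month (an assumption for this estimate)
requests_per_month <- total_capacity * 60 * 60 * 24 * 30  # about 13 billion

# Converted to users at 15 requests each
users_per_month <- requests_per_month / requests_per_user # about 864 million
```

This is a ceiling that assumes the peak rate is sustained around the clock, which real traffic never does, so it is best read as headroom rather than a forecast.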

Which, if I’m right, it shouldn’t.

If you want to spin up your own super-robust blog, sign up for DigitalOcean’s lowest-tier droplet (signing up through this link gets you a $10 credit, and me a $25 credit if you end up spending $25 with them) and follow DeliciousBrains’ guide for easy installation instructions.